Advertisement videos are a rich source of purpose-driven information, combining high-quality visual, textual, and contextual cues designed to engage viewers. They are often more complex than general videos of similar duration because of their structured narratives and rapid scene transitions, which poses significant challenges to multimodal large language models (MLLMs). In this work, we introduce VideoAds, the first dataset tailored for benchmarking the performance of MLLMs on advertisement videos. VideoAds comprises well-curated advertisement videos with complex temporal structures, accompanied by diverse, manually annotated questions across three core tasks: visual finding, video summary, and visual reasoning. We propose a quantitative measure to compare VideoAds against existing benchmarks in terms of video complexity. Through extensive experiments, we find that Qwen2.5-VL-72B, an open-source MLLM, achieves 73.35% accuracy on VideoAds, outperforming GPT-4o (66.82%) and Gemini-1.5 Pro (69.66%). The two proprietary models fall behind the open-source model most clearly in video summarization and reasoning, but perform best in visual finding. By comparison, human experts reach 94.27% accuracy. These results underscore the need to advance MLLMs’ temporal modeling capabilities and highlight VideoAds as a potentially pivotal benchmark for future research on understanding videos that require high-FPS sampling.

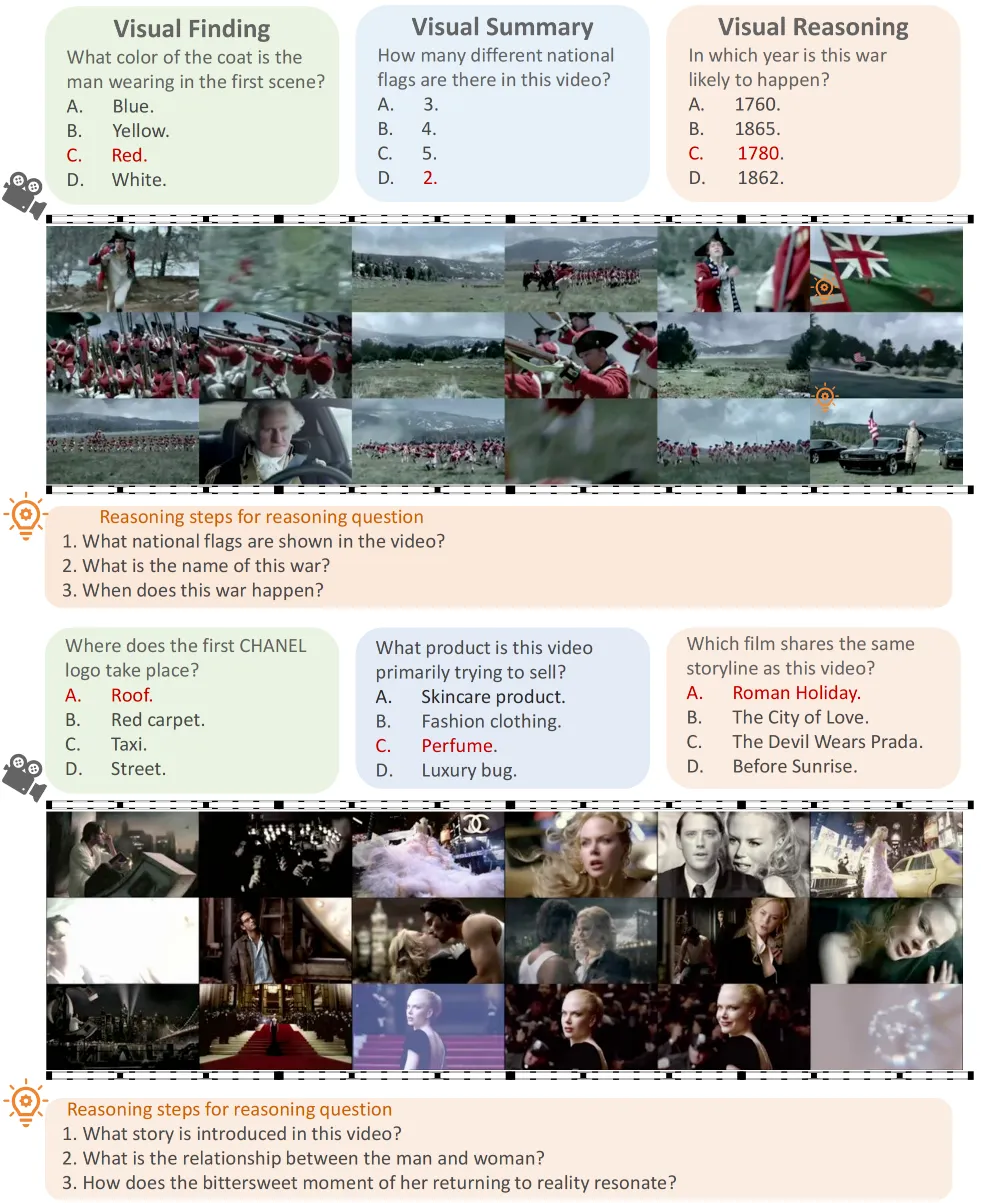

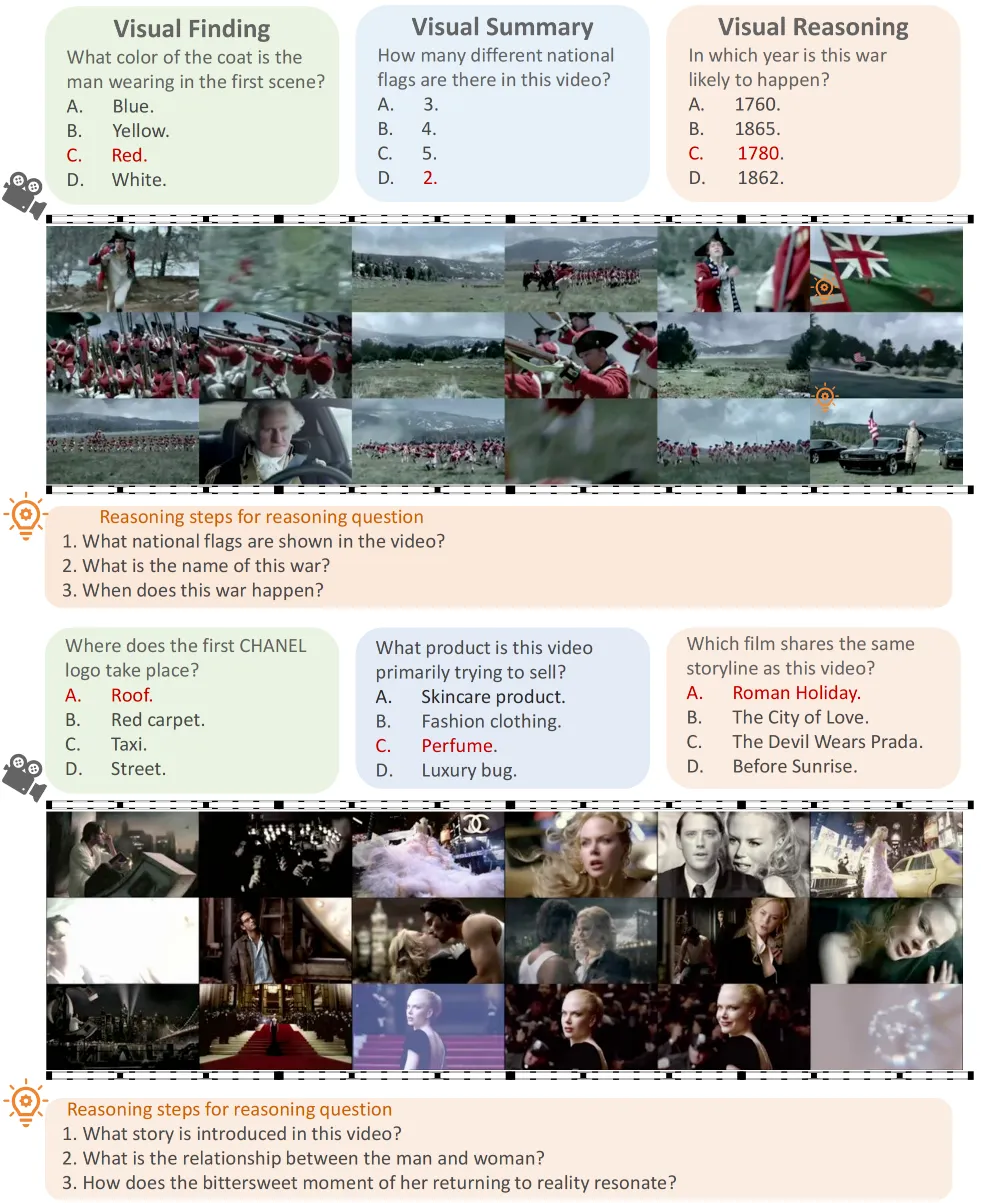

VideoAds comprises three challenging tasks: Visual Finding, Visual Summary, and Visual Reasoning. They are specifically designed to evaluate MLLMs’ temporal reasoning capabilities on videos with complex temporal structures that prior benchmarks have not investigated. Unlike many previous datasets that focus on recognizing isolated actions or events, VideoAds requires models to derive the correct answers through multi-step reasoning over multimodal visual cues.
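As a rough illustration of how per-task and overall accuracy over the three tasks could be tallied, here is a minimal Python sketch. It is not the authors' evaluation code. The function name `accuracy_by_task`, the `(task, predicted, gold)` record format, exact-match scoring, and the choice to compute the overall score as a micro-average over all questions are all assumptions made for this example.

```python
from collections import defaultdict


def accuracy_by_task(records):
    """Tally accuracy (in percent) per task and over all questions.

    `records` is an iterable of (task, predicted, gold) tuples.
    This layout is hypothetical; it is not the VideoAds file format.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for task, predicted, gold in records:
        total[task] += 1
        # Assumption: an answer counts as correct only on exact match.
        if predicted == gold:
            correct[task] += 1

    per_task = {task: 100.0 * correct[task] / total[task] for task in total}
    # Assumption: the overall score is a micro-average over all questions,
    # not the mean of the three per-task accuracies.
    n_questions = sum(total.values())
    overall = 100.0 * sum(correct.values()) / n_questions if n_questions else 0.0
    return per_task, overall


# Made-up records for illustration only.
example_records = [
    ("visual_finding", "A", "A"),
    ("visual_finding", "B", "C"),
    ("video_summary", "D", "D"),
    ("visual_reasoning", "A", "B"),
]
per_task, overall = accuracy_by_task(example_records)
print(per_task, overall)
```

Reporting the per-task breakdown alongside the overall number matters here, because a model can lead overall while trailing on an individual task such as visual finding.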

Comparison of minutes-long video benchmarks. Columns: total number of videos (#Videos), number of clips (#Clips), average video duration (Len.), number of QA pairs (#QA Pairs), annotation method (Anno.; M denotes manual and A denotes automatic annotation), average number of tokens per QA pair (QA Tokens), complexity duration (Complexity Duration), and video complexity (Video Complexity).

@article{zhang2025videoads,
  title={VideoAds for Fast-Paced Video Understanding: Where Opensource Foundation Models Beat GPT-4o \& Gemini-1.5 Pro},
  author={Zhang, Zheyuan and Dou, Monica and Peng, Linkai and Pan, Hongyi and Bagci, Ulas and Gong, Boqing},
  journal={arXiv preprint arXiv:2504.09282},
  year={2025}
}